As artificial intelligence and machine learning systems become increasingly integrated into critical business operations, cybersecurity professionals face a new frontier of threats that extend beyond traditional attack vectors. Adversarial Machine Learning (AML) represents a sophisticated domain of cyber threats where malicious actors specifically target the vulnerabilities inherent in machine learning algorithms and models. Unlike conventional cybersecurity threats that exploit network or application vulnerabilities, adversarial machine learning attacks manipulate the very foundation of intelligent systems — their ability to learn, adapt, and make decisions.

The Australian Cyber Security Centre’s 2023-2024 Annual Cyber Threat Report1 highlights the emerging concern around adversarial inputs in AI systems, noting that “malicious cyber actors may be able to provide it with specially crafted inputs or prompts to force it to make a mistake, such as by generating sensitive or harmful content”. This recognition by Australia’s premier cybersecurity authority underscores the critical importance of understanding and defending against these sophisticated attack methodologies.

The threat landscape has evolved significantly, with Microsoft’s security research revealing that “cyberattacks against machine learning systems are more common than you think“2 and that attackers often combine traditional techniques like phishing with adversarial ML methods to achieve their objectives. This convergence of conventional and AI-specific attack vectors creates a complex security challenge that demands immediate attention from cybersecurity professionals and organizational leaders.

Understanding Adversarial Machine Learning

Adversarial Machine Learning encompasses the systematic study of attacks against machine learning algorithms and the development of defenses to counter such threats. At its core, AML exploits the fundamental characteristics of how machine learning models process and interpret data, creating opportunities for manipulation that can have far-reaching consequences across various industries and applications.

The field gained significant academic attention following early demonstrations where researchers showed that subtle, often imperceptible modifications to input data could cause sophisticated machine learning models to make dramatic misclassifications. These adversarial examples exposed a critical vulnerability: the gap between how humans and machines perceive and process information. While a human might not notice a few pixel changes in an image, these modifications can cause an AI system to completely misidentify the object, with potentially catastrophic results in safety-critical applications.

Google’s research “Adversarial examples are not bugs, they are features”3 on adversarial machine learning at scale demonstrates the transferability of these attacks, explaining that “adversarial examples are malicious inputs designed to fool machine learning models. They often transfer from one model to another, allowing attackers to mount black box attacks without knowledge of the target model’s parameters”. This transferability significantly amplifies the threat, as attackers can develop adversarial examples using publicly available models and successfully deploy them against proprietary systems.

The sophistication of these attacks extends beyond simple input manipulation. Microsoft’s comprehensive analysis in “Adversarial ML Threat Matrix”4 reveals that “model stealing is not the end goal of the attacker but in fact leads to more insidious model evasion”, indicating that threat actors employ multi-stage attack strategies where initial reconnaissance and model extraction serve as stepping stones to more damaging objectives.

Primary Categories of Adversarial Attacks

Evasion Attacks

Evasion attacks represent the most commonly discussed form of adversarial machine learning threats. These attacks occur during the inference phase when an AI system is actively processing data and making decisions. Attackers craft malicious inputs designed to cause the model to misclassify or make incorrect predictions while maintaining the appearance of legitimate data to human observers.

In practice, evasion attacks can manifest across various domains. In cybersecurity applications, malware authors might modify their code to evade detection by machine learning-based antivirus systems while preserving the malicious functionality. In autonomous vehicle systems, subtle modifications to traffic signs could potentially cause misinterpretation by computer vision systems, leading to dangerous driving decisions.

The effectiveness of evasion attacks stems from the high-dimensional nature of machine learning decision boundaries. While these boundaries might appear robust in aggregate, they often contain numerous local vulnerabilities that sophisticated attackers can exploit. The challenge for defenders lies in the fact that these vulnerabilities are often impossible to identify through conventional testing methods.

Data Poisoning Attacks

Data poisoning represents a more insidious form of adversarial attack that targets the training phase of machine learning model development. The Australian Cyber Security Centre in “Engaging with Artificial Intelligence (AI)”5, specifically warns about this threat, explaining that “data poisoning involves manipulating an AI model’s training data so that the model learns incorrect patterns and may misclassify data or produce inaccurate, biased or malicious outputs”.

Unlike evasion attacks that require ongoing interaction with deployed systems, data poisoning attacks can be executed during the model development phase, making them particularly dangerous because they embed vulnerabilities directly into the model’s learned behavior. These attacks can be especially effective in scenarios where organizations rely on crowdsourced data, publicly available datasets, or third-party data providers without sufficient validation mechanisms.

The long-term impact of data poisoning attacks extends beyond immediate security concerns. Compromised models may exhibit biased behavior, reduced accuracy on legitimate inputs, or hidden backdoors that activate under specific conditions. These effects can persist throughout the model’s operational lifetime, making detection and remediation particularly challenging.

Model Extraction Attacks

Model extraction attacks focus on stealing the intellectual property and operational knowledge embedded within machine learning systems. Attackers systematically query target models to reverse-engineer their decision-making processes, extract training data characteristics, or create functional replicas of proprietary algorithms.

Microsoft’s security framework in “Adversarial ML Threat Matrix”6 recognizes the strategic nature of these attacks, noting that model extraction often serves as a precursor to more sophisticated threats rather than an end goal. Once attackers successfully extract or approximate a target model, they can use this knowledge to develop more effective evasion techniques, identify specific vulnerabilities, or create competing systems that undermine the original model’s commercial value.

The threat extends beyond traditional intellectual property concerns. In sectors such as financial services, healthcare, or national security, model extraction could reveal sensitive information about institutional decision-making processes, risk assessment methodologies, or strategic priorities that adversaries could exploit for competitive advantage or malicious purposes.

Byzantine Attacks

Byzantine attacks target federated learning environments where multiple parties collaborate to train machine learning models without sharing raw data. These distributed learning systems, while offering privacy advantages, create new attack surfaces where malicious participants can influence the global model by contributing corrupted updates or gradient information.

The distributed nature of federated learning makes Byzantine attacks particularly challenging to detect and mitigate. Malicious participants can employ sophisticated strategies to mask their attacks, such as alternating between benign and malicious contributions or coordinating with other compromised nodes to amplify their influence on the global model.

Statistical Overview of Adversarial ML Threats

Current research indicates that the threat landscape for adversarial machine learning is expanding rapidly. Academic surveys from 2020 such as “Machine Learning Security in Industry: A Quantitative Survey“7, revealed practitioners’ widespread concern about the need for better protection of machine learning systems in industrial applications, highlighting a significant gap between the deployment of AI systems and the implementation of adequate security measures.

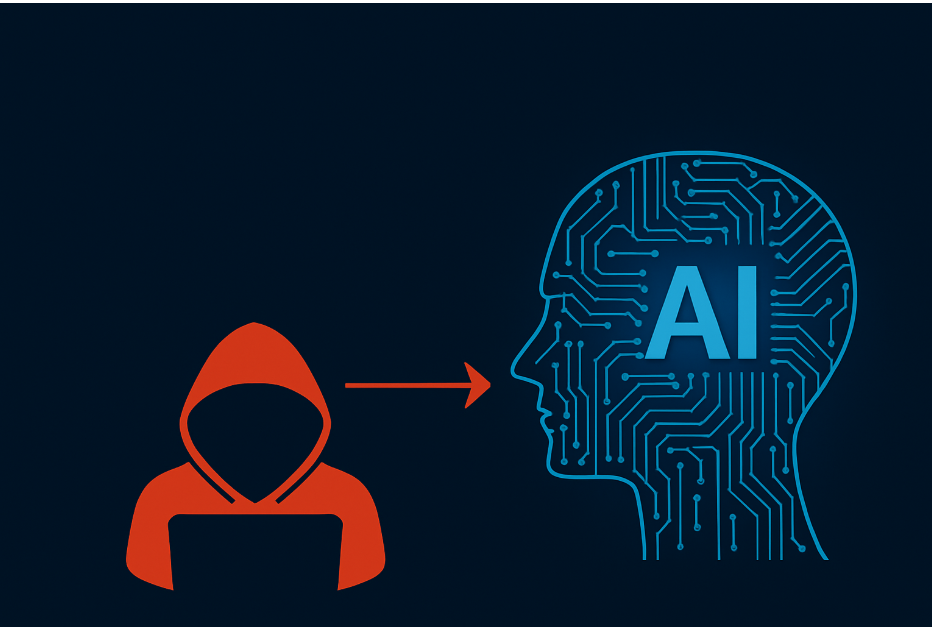

The transferability of adversarial examples presents a particularly concerning statistic for security professionals. A research study by Eindhoven University of Technology, titled “Direction-Aggregated Attack for Transferable Adversarial Examples,” 8demonstrates that adversarial examples crafted against one model successfully fool other models in 60-90% of cases, depending on the similarity of architectures and training methodologies. This high transfer rate means that attackers can develop and test their attacks against publicly available or surrogate models before deploying them against protected systems.

Microsoft’s analysis of real-world threats reveals that machine learning attacks are increasingly integrated with traditional cyber attack methodologies. Rather than operating as isolated techniques, adversarial ML methods are becoming components of broader attack campaigns that combine social engineering, lateral movement, and persistence mechanisms with AI-specific exploitation techniques.

Industry-Specific Threat Scenarios

Financial Services

The financial services sector faces unique adversarial ML risks due to the widespread adoption of algorithmic trading, fraud detection, and credit scoring systems. Adversarial attacks against these systems could enable sophisticated fraud schemes, market manipulation, or discriminatory lending practices that violate regulatory requirements.

Credit scoring models present particularly attractive targets for adversarial manipulation. Attackers might develop techniques to artificially inflate credit scores by understanding and exploiting the specific features and decision boundaries used by lending algorithms. Similarly, high-frequency trading systems that rely on machine learning for market prediction could be targeted with adversarial market data designed to trigger disadvantageous trading decisions.

Healthcare and Medical Imaging

Healthcare applications of machine learning, particularly in medical imaging and diagnostic systems, present critical safety concerns when subjected to adversarial attacks. The high-stakes nature of medical decision-making means that even small-scale adversarial manipulations could have life-threatening consequences.

Medical imaging systems that rely on deep learning for diagnosis could be targeted with adversarial examples designed to hide pathological conditions or create false positive diagnoses. The subtle nature of many adversarial perturbations means that these attacks might be undetectable to human radiologists reviewing the same images, creating dangerous scenarios where critical medical conditions go undiagnosed or unnecessary treatments are prescribed.

Autonomous Systems and Transportation

The deployment of machine learning in autonomous vehicles, drones, and other safety-critical systems creates high-profile targets for adversarial attacks. The physical nature of these systems means that successful attacks could result in property damage, injury, or loss of life, making them attractive targets for both criminals and state-sponsored actors.

Computer vision systems used in autonomous vehicles rely heavily on accurate interpretation of road signs, traffic signals, and environmental conditions. Adversarial attacks against these systems could involve physical modifications to road infrastructure or the deployment of specially designed objects that cause misinterpretation by autonomous systems while appearing normal to human drivers.

Defense Strategies and Mitigation Approaches

Adversarial Training

Adversarial training represents one of the most widely adopted defensive strategies against adversarial machine learning attacks. This approach involves deliberately exposing machine learning models to adversarial examples during the training process, enabling them to develop robustness against similar attacks during deployment.

Google’s research on adversarial training at scale demonstrates that “adversarial training is the process of explicitly training a model” to handle malicious inputs. However, this defensive technique comes with significant computational costs and may reduce the model’s performance on benign inputs, creating a trade-off between security and functionality that organizations must carefully manage.

The effectiveness of adversarial training depends heavily on the diversity and sophistication of the adversarial examples used during training. Models trained against simple attack methods may remain vulnerable to more sophisticated techniques, requiring ongoing updates to training datasets as new attack methodologies emerge.

Input Validation and Preprocessing

Robust input validation and preprocessing mechanisms serve as the first line of defense against adversarial attacks. These techniques focus on detecting and neutralizing potentially malicious inputs before they reach the core machine learning algorithms.

Statistical analysis of input distributions can help identify inputs that deviate significantly from expected patterns, potentially indicating adversarial manipulation. However, sophisticated attackers may develop techniques to evade detection by ensuring their adversarial examples fall within expected statistical ranges while still causing model misclassification.

Preprocessing techniques such as input compression, noise reduction, or feature transformation can help neutralize adversarial perturbations while preserving the essential characteristics of legitimate inputs. The challenge lies in selecting preprocessing methods that effectively counter adversarial manipulations without degrading the system’s performance on legitimate use cases.

Ensemble Methods and Diversity

Ensemble approaches that combine multiple machine learning models can provide increased robustness against adversarial attacks. The principle underlying this defensive strategy is that adversarial examples that successfully fool one model are less likely to transfer effectively to multiple diverse models simultaneously.

IBM’s research in “Adversarial Robustness: From Self-Supervised Pre-Training to Fine-Tuning”9 on adversarial robustness emphasizes the importance of diversity in ensemble defenses. Models trained with different architectures, datasets, or training methodologies are more likely to exhibit diverse vulnerabilities, making it challenging for attackers to develop adversarial examples that successfully exploit all ensemble members simultaneously.

However, recent research suggests that sophisticated attackers can develop ensemble-specific attacks that target the collective decision-making process of multiple models. This evolution in attack sophistication requires corresponding advances in ensemble defense mechanisms, including dynamic model selection and adaptive voting strategies.

Monitoring and Anomaly Detection

Continuous monitoring systems that can detect unusual patterns in model behavior provide crucial early warning capabilities for adversarial attacks. These systems analyze various metrics including prediction confidence, input-output relationships, and model performance statistics to identify potential security incidents.

Microsoft’s security framework in “Secure AI – Process to secure AI”10 emphasizes the importance of comprehensive monitoring, noting that “attackers can steal, poison, or reverse-engineer AI models and datasets”, requiring organizations to implement monitoring systems that can detect these various attack vectors. Effective monitoring systems must balance sensitivity to genuine security threats with tolerance for legitimate variations in system behavior.

The challenge of anomaly detection in machine learning systems lies in distinguishing between adversarial attacks and legitimate edge cases or distribution shifts in input data. Organizations must develop sophisticated baselines and adaptive thresholds that can accommodate normal operational variations while reliably detecting malicious activities.

Regulatory and Compliance Considerations

The emergence of adversarial machine learning threats is prompting regulatory bodies worldwide to develop new guidelines and requirements for AI system security. Organizations deploying machine learning systems must now consider not only traditional cybersecurity compliance requirements but also AI-specific security standards and best practices.

The Australian Cyber Security Centre’s guidance “Engaging with Artificial Intelligence (AI)”11 on engaging with artificial intelligence provides a framework for organizations to assess and manage AI-related security risks. This guidance emphasizes the importance of understanding the specific vulnerabilities associated with different types of AI systems and implementing appropriate security controls throughout the AI system lifecycle.

Compliance frameworks increasingly require organizations to demonstrate not only the accuracy and fairness of their AI systems but also their robustness against adversarial attacks. This evolution in regulatory expectations means that adversarial ML security is becoming a business necessity rather than merely a technical consideration.

Future Trends and Emerging Threats

The landscape of adversarial machine learning is rapidly evolving, with new attack methodologies and defense mechanisms emerging regularly. The integration of large language models and generative AI systems into business processes creates new categories of adversarial threats that extend beyond traditional classification and prediction tasks.

Prompt injection attacks against large language models represent a new frontier in adversarial ML, where attackers craft input prompts designed to manipulate the behavior of AI systems in unintended ways. These attacks can cause AI systems to generate harmful content, reveal training data, or bypass safety restrictions, creating new categories of security vulnerabilities that organizations must address.

The democratization of AI development tools and the availability of powerful pre-trained models also lower the barrier to entry for adversarial attack development. As machine learning becomes more accessible to non-experts, the potential for both intentional and accidental adversarial vulnerabilities increases, requiring more robust defensive measures across the entire AI ecosystem.

Organizational Risk Management

Effective management of adversarial ML risks requires organizations to integrate AI security considerations into their broader cybersecurity and risk management frameworks. This integration involves conducting AI-specific risk assessments, developing incident response procedures for AI-related security events, and establishing governance structures that can adapt to the rapidly evolving threat landscape.

Organizations must also consider the reputational and legal implications of adversarial attacks against their AI systems. In industries such as healthcare, finance, or autonomous systems, successful adversarial attacks could result in significant liability, regulatory penalties, or loss of public trust that extends far beyond the immediate technical impact.

The challenge of adversarial ML risk management is compounded by the rapid pace of AI development and deployment within many organizations. Security teams must develop capabilities to assess and mitigate risks for AI systems that may be developed by teams with limited security expertise or deployed in environments where traditional security controls may not be applicable.

Implementation Roadmap for Organizations

Organizations seeking to address adversarial ML threats should begin with a comprehensive assessment of their current AI deployments and associated security risks. This assessment should identify all machine learning systems in use, evaluate their exposure to different categories of adversarial attacks, and prioritize security investments based on risk levels and business impact.

The development of AI security capabilities should follow a phased approach that begins with basic defensive measures such as input validation and monitoring, progresses to more sophisticated techniques like adversarial training and ensemble methods, and ultimately incorporates advanced research-based defenses as they mature and become practical for operational deployment.

Training and awareness programs play a crucial role in building organizational capacity to address adversarial ML threats. Technical teams need specialized knowledge about AI security vulnerabilities and defense mechanisms, while business stakeholders require understanding of the strategic implications and risk management considerations associated with adversarial attacks.

Conclusion

Adversarial Machine Learning represents a critical evolution in the cybersecurity threat landscape that demands immediate attention from organizations deploying AI systems. The sophisticated nature of these attacks, combined with their potential for significant business and safety impacts, makes them a priority concern for cybersecurity professionals and business leaders alike.

The convergence of traditional cyber attack methodologies with AI-specific techniques creates complex security challenges that require new defensive approaches and organizational capabilities. As demonstrated by recent research from Microsoft, Google, IBM, and the Australian Cyber Security Centre, the threat is both real and rapidly evolving, demanding proactive rather than reactive security strategies.

Success in defending against adversarial ML threats requires a comprehensive approach that combines technical security measures with robust governance frameworks, continuous monitoring capabilities, and ongoing investment in security research and development. Organizations that take a proactive stance in addressing these challenges will be better positioned to realize the benefits of AI technology while managing the associated security risks.

The future of AI security will likely see continued evolution in both attack and defense capabilities, making it essential for organizations to maintain adaptive security strategies that can respond to emerging threats. By building strong foundations in adversarial ML security today, organizations can prepare themselves for the challenges and opportunities that lie ahead in the AI-driven future.

References

- Australian Cyber Security Centre (ACSC), “2023-2024 Annual Cyber Threat Report”, https://www.cyber.gov.au/about-us/view-all-content/reports-and-statistics/annual-cyber-threat-report-2023-2024 ↩︎

- Microsoft, “cyberattacks against machine learning systems are more common than you think”, 2020 https://www.microsoft.com/en-us/security/blog/2020/10/22/cyberattacks-against-machine-learning-systems-are-more-common-than-you-think/ ↩︎

- Ilyas et al., Google, “Adversarial examples are not bugs, they are features”, 2019 https://arxiv.org/abs/1905.02175 ↩︎

- Microsoft, “Adversarial ML Threat Matrix”, https://github.com/mitre/advmlthreatmatrix ↩︎

- Australian Cyber Security Centre (ACSC), “Engaging with Artificial Intelligence (AI)”, https://www.cyber.gov.au/sites/default/files/2024-01/Engaging%20with%20Artificial%20Intelligence%20%28AI%29.pdf ↩︎

- Microsoft, “Adversarial ML Threat Matrix”, https://github.com/mitre/advmlthreatmatrix ↩︎

- Papernot, N., et al., “Machine Learning Security in Industry: A Quantitative Survey”, https://arxiv.org/abs/2207.05164 ↩︎

- Eindhoven University of Technology, “Direction-Aggregated Attack for Transferable Adversarial Examples”, 2019 https://pure.tue.nl/ws/portalfiles/portal/271133475/3501769.pdf ↩︎

- IBM, “Adversarial Robustness: From Self-Supervised Pre-Training to Fine-Tuning”, https://research.ibm.com/publications/adversarial-robustness-from-self-supervised-pre-training-to-fine-tuning ↩︎

- Microsoft, “Secure AI – Process to secure AI”, 2025 https://learn.microsoft.com/en-us/azure/cloud-adoption-framework/scenarios/ai/secure ↩︎

- Australian Cyber Security Centre (ACSC), “Engaging with Artificial Intelligence (AI)”, https://www.cyber.gov.au/sites/default/files/2024-01/Engaging%20with%20Artificial%20Intelligence%20%28AI%29.pdf ↩︎

At Christian Sajere Cybersecurity and IT Infrastructure, we understand the critical importance of protecting your AI systems from sophisticated adversarial threats. Our specialized expertise in adversarial machine learning security helps organizations build robust defenses that keep pace with evolving attack methodologies. Let us secure your AI infrastructure and maintain your competitive advantage in the age of intelligent systems.

Related Blog Posts

- Common Penetration Testing Findings and Remediations

- Privacy Considerations in AI Systems: Navigating the Complex Landscape of Data Protection in the Age of Artificial Intelligence

- Threat Modeling for Application Security: A Strategic Approach to Modern Cybersecurity

- Cryptography Basics for IT Security Professionals: A Comprehensive Guide for Modern Cybersecurity

- AI Ethics and Security: Balancing Innovation and Protection

- Legal Considerations for Penetration Testing in Australia

- Managing Security Debt in Software Development: A Strategic Approach to Long-term Security Excellence