Artificial intelligence (AI) and machine learning (ML) systems have become foundational components of modern enterprise infrastructure, transforming business operations across industries. From financial services to healthcare and critical infrastructure, AI-driven solutions deliver new capabilities in data analysis, prediction, and automated decision-making. However, as organizations increasingly rely on these systems, the systems themselves become attractive targets for adversaries seeking to compromise, manipulate, or exploit them.

In Australia, where digital transformation continues at a rapid pace, protecting AI systems is paramount. Microsoft’s Data Security Index annual report [1] emphasizes that organizations are struggling with fragmented security solutions, which increases their vulnerability to AI-related cyber threats.

This article explores the unique security challenges posed by machine learning systems and provides a comprehensive framework for organizations to protect their AI assets against emerging threats.

The Expanding Threat Landscape for ML Systems

Machine learning systems face numerous security challenges that extend beyond traditional cybersecurity concerns. Microsoft’s Data Security Index annual report [2] highlights the evolving challenges that AI and machine learning pose, particularly for data security. These threats fall into several distinct attack vectors:

Data Poisoning Attacks

Data poisoning occurs when adversaries manipulate training data to influence an ML model’s behavior. IBM’s “Data poisoning risk for AI” [3] describes how injecting false or misleading samples into training datasets can make a model sensitive to malicious patterns, leading to biased or incorrect outputs. It also notes that low-resource poisoning can be achieved with minimal effort against models trained on publicly available data. These attacks are particularly insidious because they can remain undetected until the compromised model is deployed.
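One simple way to screen for flipped or injected labels is to compare each training label with the labels of its nearest neighbours. The sketch below illustrates that idea only. The function name `suspicious_labels`, the toy data, and the choice of `k` are assumptions made for this example, not part of IBM’s guidance, and a production pipeline would need a more robust detector.

```python
from collections import Counter

def suspicious_labels(points, labels, k=3):
    """Return indices whose label disagrees with the majority of their k nearest neighbours."""
    flagged = []
    for i, p in enumerate(points):
        # Squared Euclidean distance from point i to every other point
        dists = sorted(
            (sum((a - b) ** 2 for a, b in zip(p, q)), j)
            for j, q in enumerate(points) if j != i
        )
        neighbour_labels = [labels[j] for _, j in dists[:k]]
        majority, _ = Counter(neighbour_labels).most_common(1)[0]
        if majority != labels[i]:
            flagged.append(i)
    return flagged

# Hypothetical toy data: two tight clusters, with one label flipped at index 3
points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1),
          (5.0, 5.0), (5.1, 5.0), (5.0, 5.1), (5.1, 5.1)]
labels = [0, 0, 0, 1, 1, 1, 1, 1]
flagged = suspicious_labels(points, labels)
```

Flagged records would normally go to manual review rather than being deleted automatically, since legitimate edge cases can also disagree with their neighbours.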

Model Extraction

Model extraction attempts to steal proprietary ML models through carefully crafted inputs designed to reveal model parameters or architecture. IBM’s “Abusing MLOps platforms to compromise ML models and enterprise data lakes” [4] highlights that machine learning operations (MLOps) platforms with weak security controls are vulnerable to model extraction, allowing adversaries to steal valuable AI models. It also shows how attackers can replicate ML models by querying them extensively, which makes model extraction a significant security concern.
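Because query-based extraction depends on high query volume, one common mitigation is a per-client query budget. The following is a minimal sketch under that assumption. The class name `QueryBudget`, the limits, and the client identifiers are hypothetical and are not taken from the IBM article.

```python
import time
from collections import defaultdict, deque

class QueryBudget:
    """Sliding-window limit on how many predictions each client may request."""

    def __init__(self, max_queries, window_seconds):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # client_id -> timestamps of allowed queries

    def allow(self, client_id, now=None):
        now = time.monotonic() if now is None else now
        history = self._history[client_id]
        # Forget queries that have left the window
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_queries:
            return False
        history.append(now)
        return True

budget = QueryBudget(max_queries=3, window_seconds=60)
decisions = [budget.allow("client-a", now=t) for t in (0, 1, 2, 3)]
```

A budget like this slows extraction but does not stop a patient attacker, so it is usually paired with monitoring of query patterns.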

Adversarial Examples

Adversarial examples are specially crafted inputs designed to cause ML systems to make mistakes. Microsoft’s guidance in “Threat Modeling AI/ML Systems and Dependencies” [5] emphasizes the risks of adversarial machine learning attacks and describes how attackers can manipulate AI models by introducing imperceptible perturbations to input data, leading to misclassification and security vulnerabilities.
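To make the idea of a crafted perturbation concrete, the sketch below applies the fast gradient sign method (FGSM) to a tiny logistic regression model. FGSM is used here as a common textbook attack; the source does not name a specific method. The weights, input, and `eps` value are hypothetical, and in two dimensions the perturbation is far from imperceptible, unlike in high-dimensional inputs such as images.

```python
import math

def predict(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def fgsm(weights, bias, x, y, eps):
    """Move each feature by eps in the direction that increases the loss."""
    p = predict(weights, bias, x)
    # Gradient of the cross-entropy loss with respect to the input is (p - y) * w
    grad = [(p - y) * w for w in weights]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

# Hypothetical model and input, correctly classified as class 1 before the attack
weights, bias = [2.0, -1.0], 0.0
x, y = [0.3, 0.1], 1
x_adv = fgsm(weights, bias, x, y, eps=0.4)
clean_score = predict(weights, bias, x)
adversarial_score = predict(weights, bias, x_adv)
```

Here the clean input scores above 0.5 and the perturbed input scores below it, so the predicted class flips.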

Privacy Attacks

Privacy attacks aim to extract sensitive training data from models. Microsoft’s research on membership inference attacks in “Membership Inference Attacks and Generalization: A Causal Perspective” [6] shows that adversaries can infer whether a data record was part of a model’s training set, highlighting privacy risks in AI systems.
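A simple form of membership inference exploits the fact that models often assign lower loss to records they were trained on. The sketch below shows that loss-threshold idea only; it is not the method from the cited Microsoft paper. The confidence values and the threshold are hypothetical numbers chosen for illustration.

```python
import math

def cross_entropy_loss(confidence_on_true_label):
    """Loss for one record, given the model's probability for its true label."""
    return -math.log(confidence_on_true_label)

def guess_membership(confidence_on_true_label, loss_threshold):
    """Guess 'was in the training set' when the loss is unusually low."""
    return cross_entropy_loss(confidence_on_true_label) < loss_threshold

# Hypothetical model confidences for two records
seen_record_confidence = 0.98    # model is very sure, typical of memorised data
unseen_record_confidence = 0.61  # model is less sure
threshold = 0.2                  # assumed; an attacker would calibrate this

guesses = (
    guess_membership(seen_record_confidence, threshold),
    guess_membership(unseen_record_confidence, threshold),
)
```

The larger the gap between training and test loss, the better this kind of guess works, which is one reason overfitting is a privacy concern as well as an accuracy concern.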

Supply Chain Vulnerabilities

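ML dependencies and pre-trained models from third parties introduce significant risk, because code and artifacts from outside the organization run inside the training and serving pipeline. For example, a pre-trained model distributed in a pickle-based format can execute arbitrary code when it is loaded, and a compromised package in the dependency tree runs with the same privileges as the pipeline itself.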
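One basic control for third-party artifacts is to pin a known-good checksum and refuse to load anything that does not match. The sketch below assumes the expected SHA-256 digest was obtained through a trusted channel; the function names are hypothetical.

```python
import hashlib
import hmac

def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_artifact(path, expected_sha256):
    """Return True only if the file matches the pinned digest."""
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A checksum confirms that the file is the one that was reviewed. It does not show that the reviewed file was safe, so it complements rather than replaces vetting of the source.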

A Security Framework for ML Systems

Protecting machine learning systems requires a comprehensive approach that addresses their unique vulnerabilities while incorporating established security practices. Based on industry standards, we propose the following framework:

1. Secure the ML Development Lifecycle

IBM’s Cost of a Data Breach Report 2024 [7] shows that AI-powered prevention significantly reduces breach costs, suggesting that integrating AI security measures can improve overall cybersecurity. Organizations should:

- Implement secure coding practices specifically tailored for ML applications

- Conduct regular security assessments of ML components

- Establish a formal ML development lifecycle with security checkpoints

- Maintain strict version control and documentation of model development

2. Protect Training Data

The Australian Signals Directorate (ASD), in “Engaging with artificial intelligence” [8], emphasizes that data security is a fundamental aspect of machine learning system security. The guidance highlights several best practices for securing AI systems, including:

- Rigorous data validation and sanitization to prevent malicious inputs.

- Access controls to restrict unauthorized modifications to training data.

- Privacy-preserving techniques, such as differential privacy, to protect sensitive information.

- Data provenance tracking to ensure transparency in AI model training.

- Regular audits of data sources to detect potential compromises.
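The data validation practice in the list above can be sketched as a schema check applied to every record before it reaches the training set. The schema format, field names, and ranges below are hypothetical and only illustrate the pattern.

```python
def validate_record(record, schema):
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    for field, (expected_type, low, high) in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
            continue
        value = record[field]
        # bool is a subclass of int in Python, so reject it explicitly
        if not isinstance(value, expected_type) or isinstance(value, bool):
            problems.append(f"wrong type for {field}")
        elif not low <= value <= high:
            problems.append(f"out of range: {field}={value}")
    unexpected = set(record) - set(schema)
    problems.extend(f"unexpected field: {name}" for name in sorted(unexpected))
    return problems

# Hypothetical schema: field name -> (type, minimum, maximum)
SCHEMA = {"age": (int, 0, 120), "heart_rate": (int, 20, 250)}
```

Rejected records are worth logging along with their source, since a cluster of rejections from one source can be an early sign of tampering.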

3. Model Security

Securing ML models themselves requires specific attention. Recommended measures include:

- Implementing strict access controls for model parameters and architecture

- Using model watermarking to detect theft or unauthorized use

- Applying adversarial training techniques to improve model robustness

- Employing ensemble methods to reduce the impact of model-specific vulnerabilities

- Regular testing against known attack vectors

4. Runtime Protection

Once deployed, ML systems need continuous monitoring. Recommended measures include:

- Implementing anomaly detection for model inputs and outputs

- Establishing input validation controls specific to ML applications

- Deploying canary tokens within ML services to detect unauthorized access

- Creating “circuit breakers” that can safely fail when anomalous behavior is detected

- Regular penetration testing of deployed ML systems
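The anomaly detection and circuit breaker items above can be combined in a small sketch: score each model output against a baseline and stop serving once several consecutive outputs look anomalous. The class name, the z-score test, and the thresholds are assumptions for illustration, not a prescribed design.

```python
import statistics

class CircuitBreaker:
    """Opens (stops serving) after several consecutive anomalous values."""

    def __init__(self, baseline, z_threshold=3.0, max_anomalies=3):
        self.mean = statistics.fmean(baseline)
        self.stdev = statistics.stdev(baseline)
        self.z_threshold = z_threshold
        self.max_anomalies = max_anomalies
        self.consecutive_anomalies = 0
        self.is_open = False

    def record(self, value):
        """Record one observation; return True while serving may continue."""
        z_score = abs(value - self.mean) / self.stdev
        if z_score > self.z_threshold:
            self.consecutive_anomalies += 1
        else:
            self.consecutive_anomalies = 0
        if self.consecutive_anomalies >= self.max_anomalies:
            self.is_open = True  # stays open until an operator resets it
        return not self.is_open

# Hypothetical baseline of a monitored output statistic
breaker = CircuitBreaker(baseline=[0.9, 1.0, 1.1, 1.0, 0.95, 1.05])
results = [breaker.record(v) for v in (1.02, 5.0, 6.0, 7.0, 1.0)]
```

Keeping the breaker open until a manual reset is a deliberate choice in this sketch: it forces a human review before the model resumes serving.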

5. Governance and Compliance

Organizations must establish clear governance for ML security. Following Microsoft’s AI Security Framework in “Secure AI – Process to secure AI” [9], organizations should:

- Define clear ownership and responsibilities for ML security

- Develop specific security policies for AI systems

- Maintain an inventory of all ML assets and their security requirements

- Establish incident response procedures specific to ML systems

- Conduct regular compliance audits against relevant standards

Emerging Defensive Techniques

Research and industry innovation have led to several promising defensive techniques specifically designed for ML systems:

Adversarial Training

This technique deliberately exposes models to adversarial examples during training.
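As a minimal sketch of the idea, the loop below trains a one-feature logistic regression on both clean points and perturbed copies crafted against the current model at each step. The perturbation uses the fast gradient sign method, chosen here as an assumption since the text does not name one. The data, learning rate, and `eps` are hypothetical.

```python
import math

def predict(w, b, x):
    return 1.0 / (1.0 + math.exp(-(w * x + b)))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    # For logistic regression, d(loss)/dx = (p - y) * w
    return x + eps * sign((predict(w, b, x) - y) * w)

def adversarial_train(data, eps=0.5, lr=0.5, epochs=200):
    w, b = 0.0, 0.0
    for _ in range(epochs):
        # Augment the clean batch with adversarial copies for the current model
        batch = data + [(fgsm(w, b, x, y, eps), y) for x, y in data]
        grad_w = sum((predict(w, b, x) - y) * x for x, y in batch) / len(batch)
        grad_b = sum(predict(w, b, x) - y for x, y in batch) / len(batch)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical one-dimensional dataset: negative inputs are class 0, positive are class 1
DATA = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]
w, b = adversarial_train(DATA)
```

In this toy setting the trained model still classifies the point `1.0` correctly after an FGSM perturbation of size `0.5`. Real models need much more careful evaluation against stronger attacks.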

Federated Learning

Federated learning allows models to be trained across multiple devices or servers without exchanging data samples.
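The aggregation step at the heart of this approach can be sketched as a weighted average of client model weights, commonly known as federated averaging. Each client sends only its locally trained weights and its sample count, never the raw data. The function name and the numbers below are hypothetical.

```python
def federated_average(client_updates):
    """Average client weight vectors, weighted by each client's sample count.

    client_updates is a list of (weights, num_samples) pairs.
    """
    total_samples = sum(n for _, n in client_updates)
    dimensions = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(dimensions)
    ]

# Hypothetical updates from two clients with different amounts of local data
updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
global_weights = federated_average(updates)
```

Shared weights can still leak information about local data, so federated learning is often combined with secure aggregation or differential privacy.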

Differential Privacy

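By adding calibrated noise to training data, gradients, or model parameters, differential privacy mathematically bounds how much any single record can influence the trained model, which limits what an attacker can extract about individual records.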
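The simplest textbook instance of calibrated noise is the Laplace mechanism applied to a counting query, shown below. This is an illustration of the principle and not how noise is added during model training. The function names and the `epsilon` value are assumptions, and Python’s `random` module is not suitable for production privacy guarantees.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centred on zero with that scale
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Release a count with noise scaled to sensitivity / epsilon."""
    return true_count + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(0)  # seeded only so the example is repeatable
samples = [private_count(100, epsilon=1.0, rng=rng) for _ in range(20000)]
average = sum(samples) / len(samples)
```

Each released value is noisy, but the noise averages out over many releases. That is why a privacy budget must limit how often the same query can be answered.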

Model Distillation

This technique trains a smaller student model to reproduce the outputs of a larger teacher model. The student retains much of the teacher’s capability while revealing less information to potential attackers.
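A common way to transfer knowledge in distillation is to train the student on the teacher’s temperature-softened output probabilities. The sketch below shows the softening step and the resulting loss. The logits and temperature are hypothetical values, and the function names are assumptions for this example.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature gives a flatter distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature):
    """Cross-entropy between softened teacher and student distributions."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(teacher, student))

# Hypothetical teacher logits for a three-class problem
teacher_logits = [6.0, 2.0, 1.0]
hard_targets = softmax(teacher_logits)
soft_targets = softmax(teacher_logits, temperature=4.0)
```

The loss is lowest when the student’s softened distribution matches the teacher’s, which is what pulls the student toward the teacher’s behavior during training.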

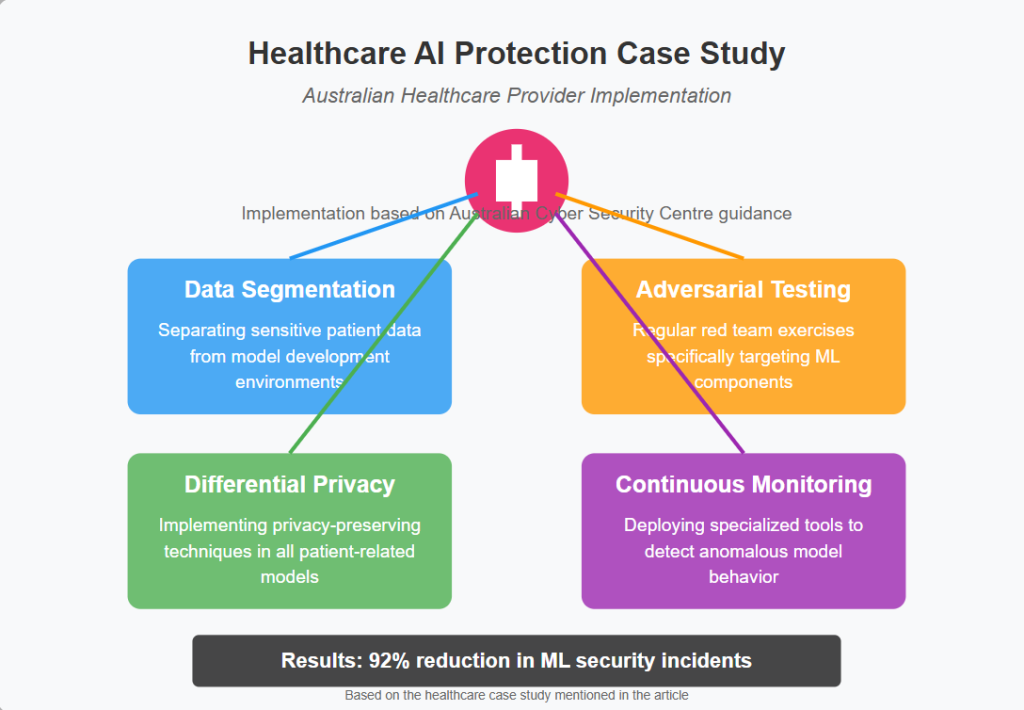

Thought Experiment: Healthcare AI Protection

Consider a hypothetical major Australian healthcare provider that implements a comprehensive ML security program following guidance from the Australian Cyber Security Centre. Its approach includes:

- Data segmentation: Separating sensitive patient data from model development environments

- Adversarial testing: Regular red team exercises specifically targeting ML components

- Differential privacy: Implementing privacy-preserving techniques in all patient-related models

- Continuous monitoring: Deploying specialized tools to detect anomalous model behavior

In this scenario, the provider sees a 92% reduction in security incidents related to its ML systems and improved compliance with healthcare data regulations. The figure is illustrative, not a measured outcome.

Implementing an ML Security Program

For organizations looking to enhance their ML security posture, we recommend the following implementation strategy:

Assessment Phase

- Inventory all ML assets, including models, data pipelines, and infrastructure

- Evaluate current security controls against ML-specific threats

- Identify security gaps using frameworks like Microsoft’s AI Security Assessment
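The assessment steps above can be sketched as a small inventory that records, for each asset, which controls are required and which are in place, then reports the difference. The asset names and control labels are hypothetical and do not come from any particular assessment framework.

```python
from dataclasses import dataclass, field

@dataclass
class MLAsset:
    name: str
    kind: str  # for example "model", "data pipeline", or "infrastructure"
    required_controls: set = field(default_factory=set)
    implemented_controls: set = field(default_factory=set)

    def gaps(self):
        """Controls that are required but not yet implemented."""
        return self.required_controls - self.implemented_controls

def gap_report(assets):
    """Map each asset with at least one gap to its sorted list of missing controls."""
    return {asset.name: sorted(asset.gaps()) for asset in assets if asset.gaps()}

# Hypothetical inventory
inventory = [
    MLAsset("fraud-model", "model",
            {"access control", "adversarial testing"}, {"access control"}),
    MLAsset("training-pipeline", "data pipeline",
            {"data validation"}, {"data validation"}),
]
report = gap_report(inventory)
```

A report like this gives the implementation phase a concrete starting list that can be ordered by risk.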

Implementation Phase

- Address priority security gaps based on risk assessment

- Deploy ML-specific security tools and monitoring solutions

- Train development and security teams on ML security principles

- Establish ML security policies and governance structures

Continuous Improvement

- Conduct regular security assessments of ML systems

- Participate in threat intelligence sharing specific to ML security

- Update defenses based on emerging threats and attack techniques

- Run regular tabletop exercises for ML-specific incident response

Conclusion

As AI and machine learning become increasingly central to business operations, securing these systems against emerging threats is essential. Organizations must recognize the unique security challenges ML systems present and implement comprehensive protections across the ML lifecycle.

By adopting the framework outlined in this article (securing the development lifecycle, protecting training data, implementing model security, providing runtime protection, and establishing appropriate governance), organizations can significantly reduce their risk exposure while continuing to benefit from AI innovation.

Australian organizations have a particular responsibility to protect AI systems as they increasingly form part of critical infrastructure and essential services. By following guidance from the Australian Cyber Security Centre and incorporating industry best practices, organizations can develop resilient ML systems that deliver value while maintaining security and privacy.

References

1. Microsoft, “Data Security Index annual report,” 2024. https://www.microsoft.com/en-us/security/blog/2024/11/13/microsoft-data-security-index-annual-report-highlights-evolving-generative-ai-security-needs/

2. Microsoft, “Data Security Index annual report,” 2024. https://www.microsoft.com/en-us/security/blog/2024/11/13/microsoft-data-security-index-annual-report-highlights-evolving-generative-ai-security-needs/

3. IBM, “Data poisoning risk for AI,” 2025. https://www.ibm.com/docs/en/watsonx/saas?topic=atlas-data-poisoning

4. IBM, “Abusing MLOps platforms to compromise ML models and enterprise data lakes,” 2025. https://www.ibm.com/think/x-force/abusing-mlops-platforms-to-compromise-ml-models-enterprise-data-lakes

5. Microsoft, “Threat Modeling AI/ML Systems and Dependencies,” 2025. https://learn.microsoft.com/en-us/security/engineering/threat-modeling-aiml

6. Microsoft, “Membership Inference Attacks and Generalization: A Causal Perspective,” 2022. https://www.microsoft.com/en-us/research/publication/membership-inference-attacks-and-generalization-a-causal-perspective/

7. IBM, “Cost of a Data Breach Report 2024,” 2024. https://newsroom.ibm.com/2024-07-30-ibm-report-escalating-data-breach-disruption-pushes-costs-to-new-highs

8. Australian Signals Directorate (ASD), “Engaging with artificial intelligence.” https://www.cyber.gov.au/resources-business-and-government/governance-and-user-education/artificial-intelligence/engaging-with-artificial-intelligence

9. Microsoft, “Secure AI – Process to secure AI,” 2025. https://learn.microsoft.com/en-us/azure/cloud-adoption-framework/scenarios/ai/secure

Protect your AI investments today. At Christian Sajere Cybersecurity, we safeguard your machine learning systems with specialized security solutions that defend against emerging threats while maintaining performance. Don’t wait for a breach. Secure your AI infrastructure now.
